SpecMER: Fast Protein Generation with K-mer Guided Speculative Decoding

Thomas A. Walton, Darin Tsui, Aryan Musharaf, Amirali Aghazadeh. SpecMER: Fast Protein Generation with K-mer Guided Speculative Decoding. 39th Conference on Neural Information Processing Systems (NeurIPS 2025). Accepted, Spotlight (top 3% of submissions).

Abstract

Autoregressive models have transformed protein engineering by enabling the generation of novel protein sequences beyond those found in nature. However, their sequential inference introduces significant latency, limiting their utility in high-throughput protein screening. Speculative decoding accelerates generation by employing a lightweight draft model to sample tokens, which a larger target model then verifies and refines. Yet, in protein sequence generation, draft models are typically agnostic to the structural and functional constraints of the target protein, leading to biologically implausible outputs and a shift in the likelihood distribution of generated sequences. We introduce SpecMER (Speculative Decoding via k-mer Guidance), a novel framework that incorporates biological, structural, and functional priors using k-mer motifs extracted from multiple sequence alignments. By scoring candidate sequences in parallel and selecting those most consistent with known biological patterns, SpecMER significantly improves sequence plausibility while retaining the efficiency of speculative decoding. SpecMER achieves 24–32% speedup over standard autoregressive decoding, along with higher acceptance rates and improved sequence likelihoods.
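The abstract describes choosing among draft candidates by how well they agree with k-mer motifs from a multiple sequence alignment. The sketch below illustrates only that selection step, under simple assumptions:

- k-mers are counted over the ungapped MSA rows.
- Each candidate is scored by the average add-alpha-smoothed log-frequency of its k-mers.
- The highest-scoring draft is kept.

The function names (`build_kmer_counts`, `kmer_score`, `select_candidate`), the smoothing, and the 20-letter alphabet are hypothetical illustration choices, not SpecMER's published scoring rule. The target-model verification step of speculative decoding is omitted.

```python
from collections import Counter
import math

def build_kmer_counts(msa, k):
    """Count k-mers across MSA rows after removing gap characters ('-')."""
    counts = Counter()
    for seq in msa:
        ungapped = seq.replace("-", "")
        for i in range(len(ungapped) - k + 1):
            counts[ungapped[i:i + k]] += 1
    return counts

def kmer_score(candidate, counts, k, alpha=1.0):
    """Average smoothed log-frequency of the candidate's k-mers (hypothetical scoring rule)."""
    total = sum(counts.values())
    vocab = 20 ** k  # assumes the 20 standard amino acids
    kmers = [candidate[i:i + k] for i in range(len(candidate) - k + 1)]
    if not kmers:
        return float("-inf")
    logp = [math.log((counts[m] + alpha) / (total + alpha * vocab)) for m in kmers]
    return sum(logp) / len(logp)

def select_candidate(prefix, drafts, counts, k):
    """Return the draft continuation whose k-mers best match the MSA.

    The last k-1 residues of the prefix are prepended so k-mers spanning
    the prefix/draft boundary are also scored.
    """
    context = prefix[max(0, len(prefix) - (k - 1)):]
    return max(drafts, key=lambda d: kmer_score(context + d, counts, k))
```

For example, take the toy alignment `["MKV-LAG", "MKVLLAG", "MRVLAG-"]` with `k = 3` and the prefix `"MK"`. The draft `"VLA"` outscores `"WWW"` because its boundary-spanning k-mers `KVL` and `VLA` both occur in the alignment.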

GOLF: A Generative AI Framework for Pathogenicity Prediction of Myocilin OLF Variants

Thomas A. Walton, Darin Tsui, Lauren Fogle, Dustin Huard, Rafael Siqueria Chagas, Raquel Lieberman, Amirali Aghazadeh. GOLF: A Generative AI Framework for Pathogenicity Prediction of Myocilin OLF Variants. Machine Learning in Computational Biology (MLCB 2025). PMLR (coming soon).

Abstract

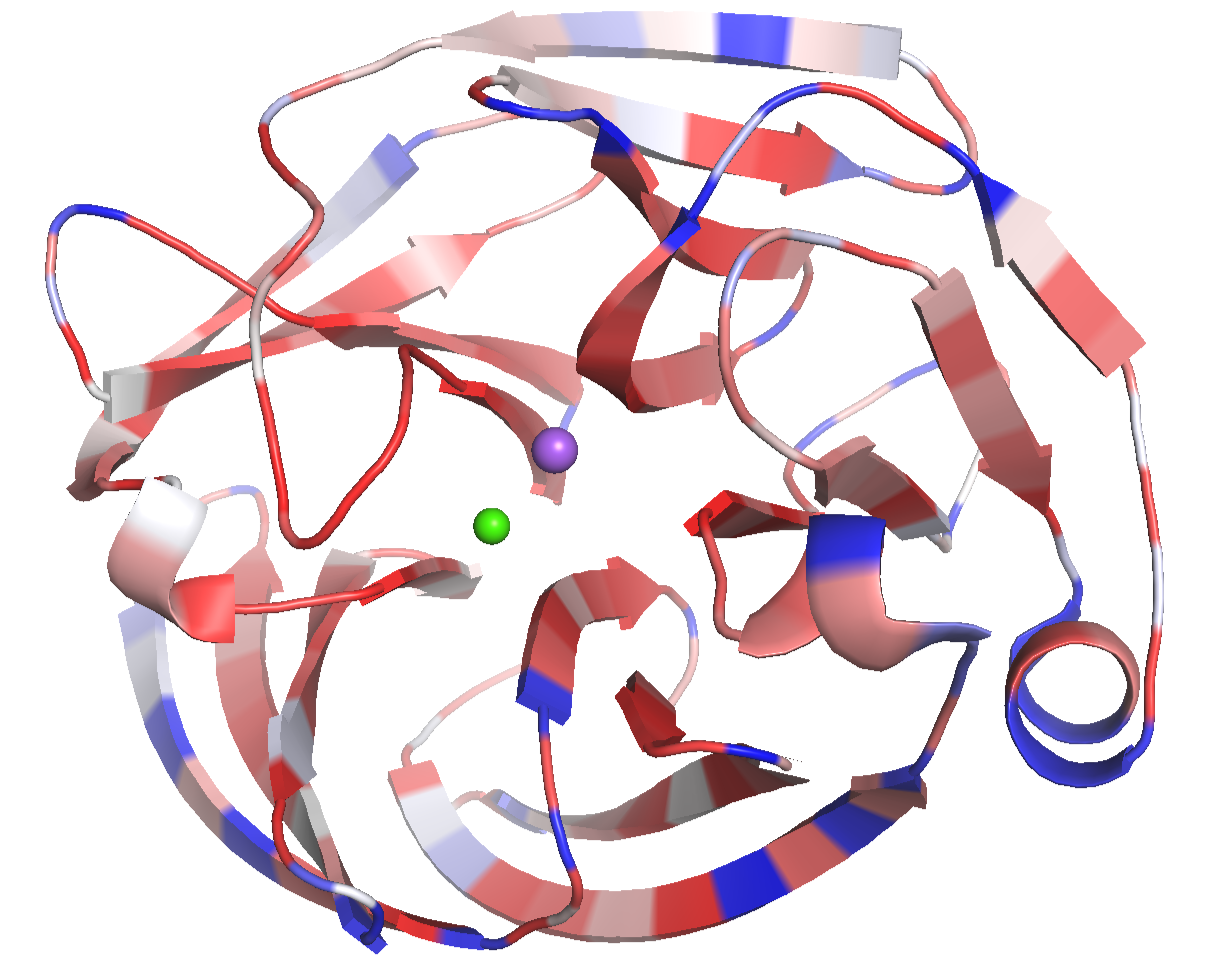

Missense mutations in the MYOC gene, particularly those affecting the olfactomedin (OLF) domain of the myocilin protein, can be causal for open-angle glaucoma, a leading cause of irreversible blindness. However, predicting the pathogenicity of these mutations remains challenging due to the complex effects of toxic gain-of-function variants and the scarcity of labeled clinical data. Herein, we present GOLF, a generative AI framework for assessing and explaining the pathogenicity of OLF domain variants. GOLF collects and curates a comprehensive dataset of OLF homologs and trains generative models that predict the effect of monoallelic missense mutations. While these models exhibit diverse predictive behaviors, they collectively achieve accurate classification of known pathogenic and benign variants. To interpret their decision mechanisms, GOLF uses a sparse autoencoder (SAE) that reveals the underlying biochemical features exploited by the generative models to predict variant effects. GOLF enables accurate evaluation of disease-causing mutations, supporting early genetic risk stratification for glaucoma and facilitating interpretable investigations into the molecular basis of pathogenic variants.
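The abstract does not detail GOLF's generative models. As a hedged illustration of how a generative model trained on homologs can score a missense variant, the sketch below fits a simple site-independent profile (per-column amino-acid frequencies with pseudocounts) to aligned homologs. It then scores a variant as the log-likelihood ratio of the mutant to the wild-type residue. More negative scores suggest a less tolerated substitution. The profile model, the `K2R`-style variant notation, and the names `fit_profile` and `variant_score` are assumptions for illustration, not GOLF's models.

```python
import math

AA = "ACDEFGHIKLMNPQRSTVWY"  # 20 standard amino acids

def fit_profile(homologs, pseudocount=1.0):
    """Per-column amino-acid probabilities from equal-length aligned homologs.

    Gap or non-standard characters are skipped; every residue gets a pseudocount.
    """
    profile = []
    for j in range(len(homologs[0])):
        counts = {a: pseudocount for a in AA}
        for seq in homologs:
            if seq[j] in counts:
                counts[seq[j]] += 1
        total = sum(counts.values())
        profile.append({a: c / total for a, c in counts.items()})
    return profile

def variant_score(profile, variant):
    """Log-likelihood ratio log p(mutant) - log p(wild type) for a variant like 'K2R' (1-based)."""
    wt, pos, mt = variant[0], int(variant[1:-1]), variant[-1]
    col = profile[pos - 1]
    return math.log(col[mt]) - math.log(col[wt])
```

With homologs `["MKVLAG", "MKVLAG", "MRVLSG"]`, `K2R` scores log(2/3) while `K2W` scores log(1/3). The substitution never observed at that position is therefore ranked as more damaging. A pathogenicity call would then need a decision threshold, which this sketch does not attempt to set.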

Machine Learning Analysis of Mass Spectrometry Data Can Differentiate Organic Distributions in Meteoric and Terrestrial Geologic Samples

D. K. Buckner, D. Saeedi, T. Walton, J. C. Aponte, A. Aghazadeh. Machine Learning Analysis of Mass Spectrometry Data Can Differentiate Organic Distributions in Meteoric and Terrestrial Geologic Samples. 56th Lunar and Planetary Science Conference (LPSC).

Abstract

We investigated differences in organic distributions in a suite of 18 samples representative of two distinct pools: meteorites containing organics synthesized through abiotic processes, and terrestrial materials containing organics formed by ancient or extant life. Comprehensive two-dimensional gas chromatography coupled with high-resolution time-of-flight mass spectrometry (GC×GC-HRTOF-MS) data of non- and semi-polar organics extracted from these samples were used to train an ML algorithm called LifeTracer to assess differences between abiotic and biotic samples.
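The abstract does not describe LifeTracer's internals. Purely as a sketch of the evaluation setting, assume each sample is summarized as a vector of compound peak intensities. The code below classifies samples as abiotic or biotic with a nearest-centroid rule on log-scaled intensities. It estimates accuracy by leave-one-out cross-validation, a common choice when only a small number of samples (here, 18) is available. The feature representation, the classifier, and the function names are hypothetical stand-ins, not LifeTracer's method.

```python
import math

def log_features(peaks):
    """log(1 + intensity) per compound peak, compressing the dynamic range."""
    return [math.log1p(v) for v in peaks]

def centroid(rows):
    """Element-wise mean of equal-length feature vectors."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def predict(train_x, train_y, x):
    """Assign x to the label whose class centroid is nearest in Euclidean distance."""
    best, best_d = None, float("inf")
    for label in sorted(set(train_y)):
        c = centroid([r for r, y in zip(train_x, train_y) if y == label])
        d = math.dist(c, x)
        if d < best_d:
            best, best_d = label, d
    return best

def loo_accuracy(xs, ys):
    """Leave-one-out accuracy: each sample is classified using all the others."""
    hits = 0
    for i in range(len(xs)):
        train_x, train_y = xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]
        hits += predict(train_x, train_y, xs[i]) == ys[i]
    return hits / len(xs)
```

Leave-one-out keeps every sample out of its own training set, which matters when holding out a larger test split would leave too few samples of either class to train on.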

A Markovian Error Model for False Negatives in DNN-based Perception-Driven Control Systems

Kruttidipta Samal, Thomas Walton, Hoang-Dung Tran, Marilyn Wolf. 2022. A Markovian Error Model for False Negatives in DNN-based Perception-Driven Control Systems. International Neural Network Society Workshop on Deep Learning Innovations and Applications (INNS DLIA 2023). IEEE.

Abstract

This paper presents an improved Markovian error model for Deep Neural Network (DNN) based perception in autonomous vehicles and other perception-driven control systems. Many modern autonomous systems, such as those for autonomous navigation, rely on DNN-based perception to drive control and planning, so perception errors significantly affect both control/planning performance and system safety. The traditional independent, identically distributed (IID) perception error model is inadequate for these applications because the image sequences supplied to a DNN-based perception module are not independent in the real world. Based on this observation, we develop a novel Markov model to describe the error behavior of a DNN perception model: an error in one frame is likely to signal errors in successive frames, effectively reducing the sample rate of the control commands. We evaluate the effect of Markovian perception errors on a drone-control simulator and show that the Markovian error model provides a better estimate of control performance than the traditional IID model.
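To make the contrast between the two error models concrete, the sketch below simulates per-frame false negatives (missed detections) in two ways: under a two-state Markov chain, and under an IID model with the same long-run miss rate. The parameters `p_enter` (probability of a miss after a correctly detected frame) and `p_stay` (probability of a miss after a missed frame) are hypothetical, not the paper's fitted values. For such a chain, the stationary miss rate is p_enter / (1 - p_stay + p_enter). Misses arrive in bursts whose lengths are geometric with mean 1 / (1 - p_stay).

```python
import random

def simulate_markov_fn(n, p_enter, p_stay, seed=0):
    """Per-frame miss flags from a two-state Markov chain, starting from a correct frame."""
    rng = random.Random(seed)
    misses, prev = [], False
    for _ in range(n):
        prob = p_stay if prev else p_enter  # miss probability depends on the previous frame
        prev = rng.random() < prob
        misses.append(prev)
    return misses

def simulate_iid_fn(n, rate, seed=0):
    """Per-frame miss flags drawn independently with a fixed miss rate."""
    rng = random.Random(seed)
    return [rng.random() < rate for _ in range(n)]

def stationary_miss_rate(p_enter, p_stay):
    """Long-run fraction of missed frames under the Markov chain."""
    return p_enter / (1.0 - p_stay + p_enter)

def mean_burst_length(misses):
    """Average length of consecutive runs of missed frames."""
    bursts, run = [], 0
    for m in misses:
        if m:
            run += 1
        elif run:
            bursts.append(run)
            run = 0
    if run:
        bursts.append(run)
    return sum(bursts) / len(bursts) if bursts else 0.0
```

With `p_enter = 0.05` and `p_stay = 0.8`, both models miss about 20% of frames. However, the Markov model's misses come in runs averaging about 5 frames, versus about 1.25 frames for the IID model. Those longer runs are what lower the effective sample rate seen by the controller, which an IID model with the same miss rate cannot capture.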

Under the supervision of Dr. Mohammad R. Hasan, I investigated the impact of algorithmic bias in state-of-the-art CNNs. Using Score-CAM, a class activation mapping method that highlights the image regions driving a network's prediction without relying on gradients, we were able to expose and analyze this bias. Our findings showed that the learned associations of networks such as MobileNetV3 and ResNet-50 can be fundamentally flawed. Artificially injecting confusing objects into images of flowers exposed weaknesses in these networks. While deep learning is a fast-progressing field, many questions remain about the inner workings of these networks. This research highlights a weakness in the field's current paradigm and opens new opportunities to make networks more robust.
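Score-CAM builds a saliency map without gradients, in three steps:

1. Each activation map from a convolutional layer is upsampled to the input size, normalized to [0, 1], and used to mask the input.
2. The map's weight is the increase in the target-class probability relative to a blank baseline.
3. The saliency map is the ReLU of the weighted sum of the activation maps.

The NumPy sketch below follows that recipe for a single-channel image and a model given as a callable that returns logits. The toy linear model in the test, the nearest-neighbour upsampling, and the function names are assumptions for illustration, not the setup used in this project.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1-D logit vector."""
    e = np.exp(z - z.max())
    return e / e.sum()

def upsample(a, shape):
    """Nearest-neighbour upsampling of an (h, w) map to `shape`; assumes integer scale factors."""
    h, w = a.shape
    return np.kron(a, np.ones((shape[0] // h, shape[1] // w)))

def score_cam(image, activations, model, target):
    """Score-CAM saliency at feature-map resolution.

    image: (H, W) array; activations: (K, h, w) feature maps from some layer;
    model: callable mapping an (H, W) array to a 1-D vector of class logits.
    """
    baseline = softmax(model(np.zeros_like(image)))[target]
    weights = []
    for a in activations:
        m = upsample(a, image.shape)
        span = m.max() - m.min()
        m = (m - m.min()) / span if span > 0 else np.zeros_like(m)  # normalise mask to [0, 1]
        # Weight = gain in target-class probability when only the masked region is kept.
        weights.append(softmax(model(image * m))[target] - baseline)
    cam = np.tensordot(np.array(weights), activations, axes=1)  # weighted sum over the K maps
    return np.maximum(cam, 0.0)  # ReLU keeps only regions that support the target class
```

In a real CNN such as ResNet-50, `activations` would come from a late convolutional layer and `model` would be the full forward pass. Regions where an injected object, rather than the flower, receives high saliency are the kind of evidence that reveals a spurious association.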